Texture Classification using tactile sensors

January 2022 - March 2022

Use frequency-domain and time-domain data from a record needle to classify textures (full report)

Team members: Devesh Bhura, James Avtges

Overview

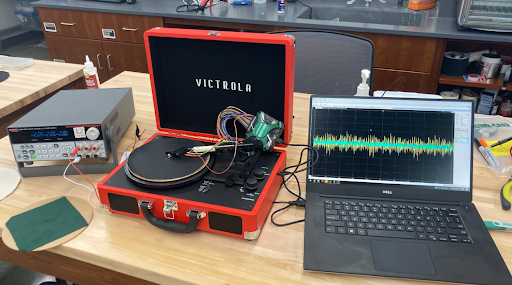

This project explores tactile sensing with a low-cost, effective sensor for classifying different textures. The new approach uses a record needle to generate voltage signals as a texture is run underneath the needle. Features were selected and extracted from the data on the needle's right and left channels, and the textures were then classified.

The experiment was conducted on 11 different textures. Pre-processing followed two routes: applying an FFT and PCA to obtain features purely in the frequency domain, and manually selecting features from the raw time-series data. The purely frequency-domain features gave the best results: Extra Trees classifier - 99.7% test accuracy; Random Forest classifier - 99.7% test accuracy; Support Vector Machine - 99.8% test accuracy; Gaussian Naive Bayes - 99.0% test accuracy; k-d tree nearest neighbor - 99.4% test accuracy. These results are comparable to those in existing research, although they were obtained with fewer samples per texture. Future work includes experimenting with more samples per texture and more textures, and exploring live classification of surface finishes.
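The frequency-domain route described above (an FFT of each channel followed by PCA) can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the function names `fft_magnitude_features` and `pca_project`, the recording length, the number of components, and the random stand-in data are all assumptions made for the example.

```python
import numpy as np

def fft_magnitude_features(left, right):
    """Concatenate one-sided FFT magnitudes of both channels per recording.

    left, right: arrays of shape (n_recordings, n_samples).
    (Hypothetical helper; the report may build its features differently.)
    """
    left_mag = np.abs(np.fft.rfft(left, axis=1))
    right_mag = np.abs(np.fft.rfft(right, axis=1))
    return np.hstack([left_mag, right_mag])

def pca_project(features, n_components):
    """Project features onto their top principal components via an SVD."""
    centered = features - features.mean(axis=0)
    # Rows of vt are the principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T

# Synthetic stand-in data (assumption): 20 recordings, 256 samples per channel.
rng = np.random.default_rng(0)
left = rng.normal(size=(20, 256))
right = rng.normal(size=(20, 256))

spectra = fft_magnitude_features(left, right)
reduced = pca_project(spectra, n_components=5)
print(spectra.shape, reduced.shape)
```

The reduced matrix would then serve as the input to the classifiers; how many components to keep is a tuning choice that this sketch does not try to settle.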

Results

When running the classifiers, the data set was divided into training and testing sets with a 70:30 split. Each classifier was run on different randomly generated training and testing splits over 1000 iterations, and the average results for each classifier, after optimizing hyper-parameters, are presented below. C is the regularization parameter that decides how much importance is given to misclassification in SVMs. The gamma parameter decides how far the influence of a single training example reaches. For the k-d tree nearest neighbor classifier, the leaf size is the number of examples in each subset being considered for the tree. For the tree-based classifiers, the number of estimators is the number of trees in the classifier, and the maximum depth is how many levels deep each tree can grow.

- Extra Trees (158 estimators with max depth of 15): 99.5%

- Random Forest (53 estimators with max depth of 41): 99.1%

- SVM (linear kernel with C=211 and gamma = 0.01): 99.3%

- Gaussian Naive Bayes: 95.7%

- k-Nearest Neighbor (leaf-size of 1 and 4 nearest neighbors): 95.6%
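The evaluation procedure above (repeated random 70:30 splits, averaged per classifier) could be reproduced along these lines with scikit-learn. This is a sketch under stated assumptions: the data is synthetic, only 5 splits are used instead of 1000 to keep it quick, and the hyper-parameters are simply copied from the list above rather than re-tuned.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import ExtraTreesClassifier, RandomForestClassifier
from sklearn.model_selection import ShuffleSplit, cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC

# Synthetic stand-in for the texture features (assumption): 11 classes.
X, y = make_classification(
    n_samples=330, n_features=20, n_informative=10,
    n_classes=11, n_clusters_per_class=1, random_state=0,
)

classifiers = {
    "Extra Trees": ExtraTreesClassifier(n_estimators=158, max_depth=15, random_state=0),
    "Random Forest": RandomForestClassifier(n_estimators=53, max_depth=41, random_state=0),
    # scikit-learn ignores gamma for a linear kernel; it is kept here only
    # to mirror the settings listed above.
    "SVM": SVC(kernel="linear", C=211, gamma=0.01),
    "Gaussian Naive Bayes": GaussianNB(),
    "k-Nearest Neighbor": KNeighborsClassifier(n_neighbors=4, algorithm="kd_tree", leaf_size=1),
}

# Random 70:30 train/test splits; the write-up averages over 1000 of them.
splitter = ShuffleSplit(n_splits=5, test_size=0.3, random_state=0)

mean_accuracy = {}
for name, clf in classifiers.items():
    scores = cross_val_score(clf, X, y, cv=splitter)
    mean_accuracy[name] = scores.mean()
    print(f"{name}: {mean_accuracy[name]:.3f}")
```

Accuracies on this synthetic data will not match the figures reported above; the sketch only shows the shape of the procedure.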

Link for report

For the full report, please check this out